Let me tell you something most AI consultants won’t: the technology probably isn’t your problem.

You’ve likely been told you need better data infrastructure. Cleaner datasets. More sophisticated models. The latest LLM integration. Maybe you’ve been pitched on platforms that promise to “revolutionize your operations” or “unlock AI-driven insights.”

Here’s what actually happens: you buy the technology, implement the system, and six months later, your teams are still arguing about what a “customer record” actually means.

The Real Barrier Isn’t Technical

I’ve watched organizations invest millions in AI infrastructure only to discover the real barrier was sitting in their conference room the entire time: people using the same words to mean different things.

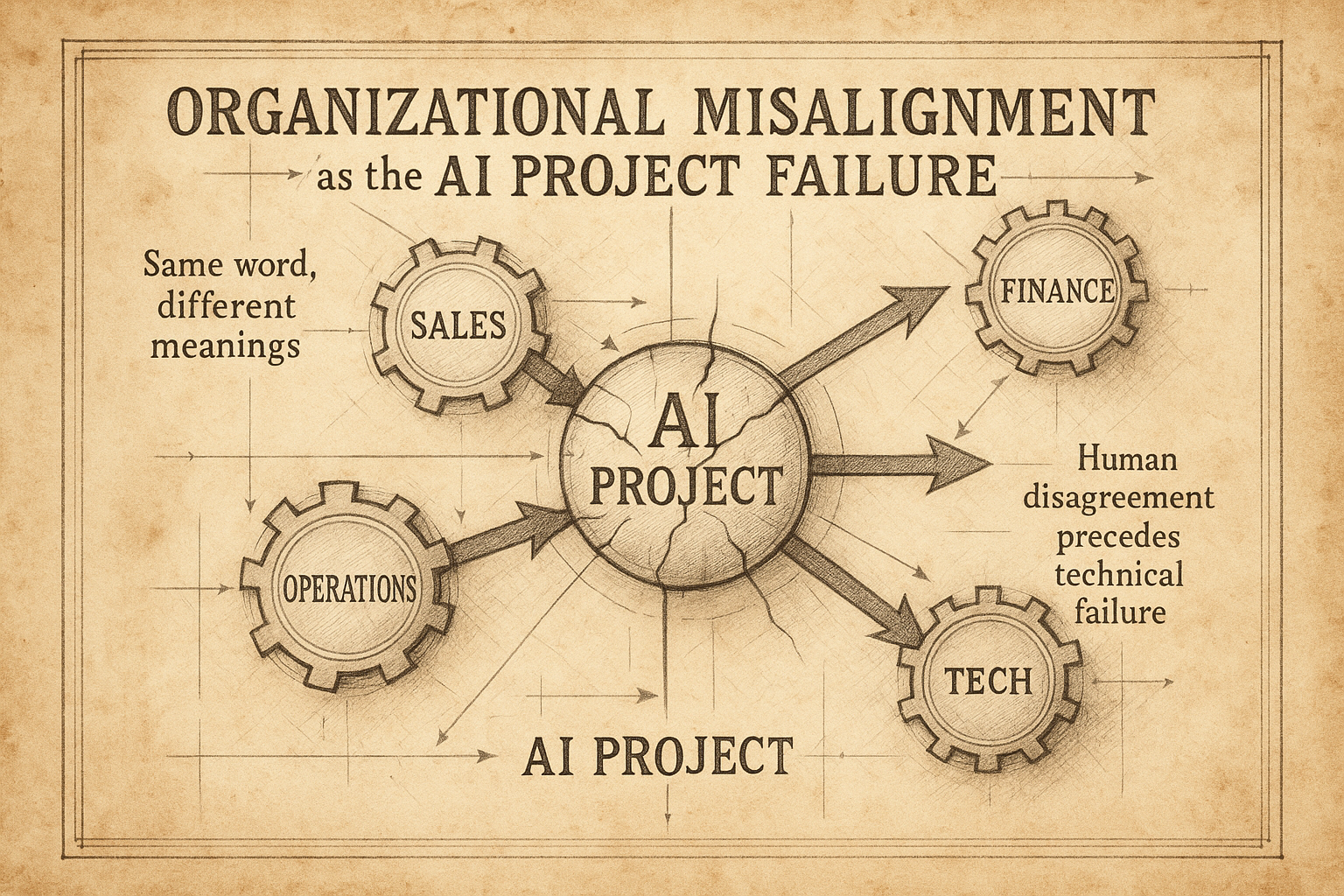

Think about the last time your sales and customer success teams had a meeting. Did they use the term “customer” the same way? When sales says “customer,” they might mean anyone who’s bought once. When customer success says “customer,” they mean active accounts under management. When finance says it, they mean anyone with an open AR balance.

Same word. Three different meanings. No amount of machine learning fixes that.

This is the truth nobody wants to hear: AI doesn’t create clarity. It amplifies whatever clarity (or confusion) already exists in your organization.

Humans Disagree Even When They Share Definitions

Here’s where it gets worse.

Even when you align on definitions, humans still disagree about priorities, scope, and what “success” looks like. I’ve seen leadership teams spend hours in strategy sessions, leave feeling aligned, and then interpret the decisions completely differently when they return to their departments.

One executive hears “prioritize customer retention.” They interpret that as investing in new CRM capabilities.

Another hears the same thing. They interpret it as expanding the customer success team.

A third sees it as a signal to tighten onboarding processes.

All three are reasonable interpretations. All three lead to different resource allocation. All three would require different AI implementations.

When you automate before you align, you don’t get efficiency. You get automated confusion.

The “Customer Record” Problem

Let me give you a concrete example.

You decide to implement an AI system to improve customer insights. Smart move. Your data team starts pulling together customer records from different systems: CRM, billing, support tickets, product usage.

But what is a “customer record,” exactly?

- Marketing thinks it’s anyone who’s ever engaged with content

- Sales thinks it’s anyone who’s had a discovery call

- Finance thinks it’s anyone who’s been invoiced

- Support thinks it’s anyone who’s submitted a ticket

- Product thinks it’s anyone with an active login

You try to create a unified view. The AI model trains on… what, exactly? A merged dataset where “customer” means five different things depending on which system contributed the record?

Now your AI is making recommendations based on a fundamentally incoherent dataset. Not because the technology failed. Because the humans never agreed on what they were measuring.
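To make that incoherence visible, here is a minimal Python sketch that applies the five departmental definitions listed above to the same handful of people. Everything in it is an assumption for illustration: the `Person` fields, the sample names, and the helpers `customers_by_department` and `contested` are hypothetical, not taken from any real CRM or billing schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    # Hypothetical flags, one per departmental definition of "customer".
    name: str
    engaged_with_content: bool = False
    had_discovery_call: bool = False
    invoiced: bool = False
    submitted_ticket: bool = False
    active_login: bool = False


# Each department's working definition of "customer", as listed in the article.
DEFINITIONS = {
    "marketing": lambda p: p.engaged_with_content,
    "sales": lambda p: p.had_discovery_call,
    "finance": lambda p: p.invoiced,
    "support": lambda p: p.submitted_ticket,
    "product": lambda p: p.active_login,
}


def customers_by_department(people):
    """Return, per department, the set of names that department counts as customers."""
    return {
        dept: {p.name for p in people if is_customer(p)}
        for dept, is_customer in DEFINITIONS.items()
    }


def contested(people):
    """Names that at least one department counts as a customer and at least one does not."""
    views = customers_by_department(people)
    counted_by_someone = set().union(*views.values())
    counted_by_everyone = set.intersection(*views.values())
    return counted_by_someone - counted_by_everyone


# Made-up sample data.
people = [
    Person("Ada", engaged_with_content=True),
    Person("Ben", engaged_with_content=True, had_discovery_call=True),
    Person("Cy", engaged_with_content=True, had_discovery_call=True,
           invoiced=True, submitted_ticket=True, active_login=True),
]

for dept, names in customers_by_department(people).items():
    print(dept, sorted(names))
print("contested:", sorted(contested(people)))
```

In this made-up data, only Cy is a customer under every definition. Ada and Ben are customers to some departments and not to others, and a model trained on the merged records has no way of knowing which answer was intended.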

Why AI Amplifies Confusion (or Clarity)

Here’s the pattern I see repeatedly:

Scenario 1: Organization with Existing Alignment

- Clear definitions across teams

- Agreement on priorities and trade-offs

- Shared mental models of how the business works

- AI Result: Amplifies their strategic advantage. Automation actually works because it’s automating coherent processes.

Scenario 2: Organization Without Alignment

- Departments using the same terms differently

- Competing interpretations of strategic priorities

- No shared understanding of the customer journey

- AI Result: Amplifies the confusion. Now you have automated processes executing contradictory logic at scale.

The technology isn’t the differentiator. Organizational clarity is.

AI is a mirror. If your organization is strategically coherent, AI will reflect that coherence back at you with increased efficiency. If your organization is fragmented, AI will reflect that fragmentation back, just faster and at greater scale.

What Alignment Looks Like Before Automation

So what does it actually look like to align before you automate?

It’s not about getting everyone to agree on everything. That’s impossible and probably not desirable. It’s about making disagreements explicit and resolvable.

Here’s what pre-automation alignment requires:

1. Shared Ontology

Not just definitions, but a shared understanding of how concepts relate to each other. When someone says “customer lifecycle,” does everyone picture the same stages, same transitions, same meaningful events?
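One way to test whether everyone really pictures the same lifecycle is to write it down as data. The sketch below is a minimal example under stated assumptions: the stage names and allowed transitions are hypothetical placeholders, and `first_invalid_step` is an illustrative helper, not a standard tool. The value is less in the code than in the argument your teams will have while filling in the table.

```python
from enum import Enum


class Stage(Enum):
    # Hypothetical stages; replace with whatever your teams actually agree on.
    LEAD = "lead"
    TRIAL = "trial"
    ACTIVE = "active"
    CHURNED = "churned"


# Allowed transitions between stages (also hypothetical).
TRANSITIONS = {
    Stage.LEAD: {Stage.TRIAL, Stage.ACTIVE},
    Stage.TRIAL: {Stage.ACTIVE, Stage.CHURNED},
    Stage.ACTIVE: {Stage.CHURNED},
    Stage.CHURNED: {Stage.ACTIVE},
}


def first_invalid_step(path):
    """Return the first (from, to) pair not allowed by TRANSITIONS, or None if the path is valid."""
    for current, nxt in zip(path, path[1:]):
        if nxt not in TRANSITIONS[current]:
            return (current, nxt)
    return None


# A path everyone would accept under this table.
print(first_invalid_step([Stage.LEAD, Stage.TRIAL, Stage.ACTIVE]))

# A path one team records but the table rejects: a lead that "churned".
print(first_invalid_step([Stage.LEAD, Stage.CHURNED]))
```

If sales insists a lead can churn and product insists it can’t, that disagreement now has a specific row in a specific table, which makes it resolvable.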

2. Explicit Trade-Offs

When priorities conflict (and they will), is there a clear framework for resolving them? If “speed to market” conflicts with “technical excellence,” how does your organization decide?

3. Resolvable Disagreement Patterns

You don’t need universal agreement. You need mechanisms for resolving disagreement. What happens when sales and product disagree about a feature request? Is there a process, or does it become a political battle?

4. Strategic Coherence

Can your leadership team articulate (in writing) what your organization optimizes for? Not in vague mission statement language, but in concrete terms that guide implementation decisions?

This is the work that has to happen before AI delivers value.

You can’t automate your way out of organizational incoherence. You can only automate what’s already coherent.

The Path Forward

If you’ve recognized your organization in this article, you’re not alone. Most companies operate with more confusion than they’d like to admit. The AI hype makes it worse because vendors want you to believe technology solves everything.

It doesn’t.

Here’s what actually works:

Start with discovery, not deployment. Before you implement AI, invest in understanding where your organization actually has clarity and where it’s operating on implicit assumptions that may not be shared.

Make mental models explicit. The conversations you’ve been avoiding are the ones where you’d have to admit your teams don’t share the same understanding of core concepts. Those are the conversations that determine whether AI helps or amplifies chaos.

Align ontology before automation. Get clear on what things mean, how they relate, and what you’re optimizing for. This isn’t philosophical indulgence. It’s the foundation that makes implementation coherent.

Build tools YOUR business needs. Not one-size-fits-all platforms that force you to adapt to their mental models. Custom solutions that reflect your actual strategic priorities.

Technology changes. Being human, with all our disagreement and misalignment, doesn’t. The companies that succeed with AI aren’t the ones with the best models. They’re the ones who’ve done the hard work of aligning before they automate.

What This Means for You

If you’re reading this and thinking, “We need to talk about this,” you’re probably right.

We use a process called Discovery to help organizations surface exactly these kinds of hidden misalignments. It’s a 60-minute conversation that produces a written Clarity Document, crystallizing where your organization has strategic coherence and where the disagreements are preventing AI from delivering value.

No obligation. No pressure. You get clarity whether we work together or not.

Because honestly? The technology is the easy part. The human alignment is where the real work happens, and where the real strategic advantage lies.

Ready to surface the hidden misalignments in your organization? Schedule a Discovery Call: a 60-minute conversation, with a Clarity Document within 48 hours. No obligation.