There’s a question that keeps coming up in our work with AI systems, one that sounds almost too simple to be profound: What if we could provide input so precisely there’s only one correct prediction?

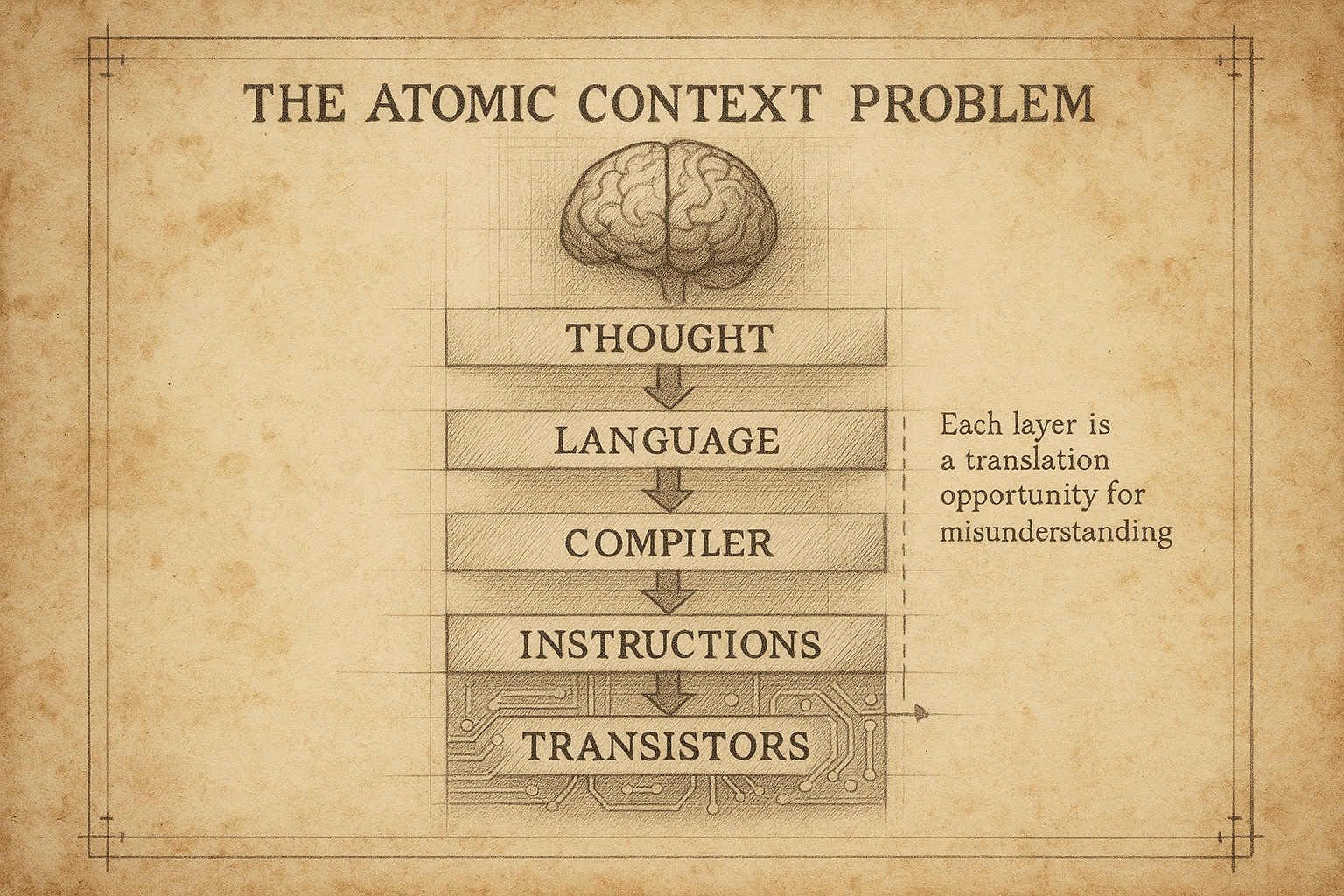

That question leads somewhere deeper than most AI conversations go. It takes us from the surface promise of “better prompts” down through layers of abstraction, all the way to the fundamental challenge of translating human intention into machine execution.

We call this the Atomic Context Problem. And understanding it changes how you think about AI entirely.

The Precision Paradox

Large Language Models predict the next token based on context. Give them vague context, get vague predictions. Give them precise context, get precise predictions. Straightforward enough.

But here’s where it gets interesting: what does “precise context” actually mean when you’re trying to translate strategic business intent into executable software?

The answer isn’t just about writing better prompts. It’s about recognizing that every layer of abstraction between your thought and the transistors executing it is a translation opportunity for misunderstanding.

And we’ve been living with this problem since long before AI entered the conversation.

A Brief History of Abstraction Layers

In the early days of software development, you wrote closer to the metal. Assembly language meant you controlled registers directly. You knew exactly what the machine was doing because the gap between your code and the hardware was minimal.

Then we added layers.

High-level languages gave us productivity. Compilers translated human-readable code into machine instructions. Frameworks abstracted common patterns. Libraries encapsulated complexity. Each layer made development faster, more accessible, more powerful.

But each layer also introduced translation risk.

When you write user.save() in a modern framework, you’re not just storing data. You’re invoking a cascade of translations: ORM interpretation, SQL generation, database driver communication, transaction management, connection pooling. Your simple intention (“save this user”) passes through a dozen interpreters before reaching the hardware.

Most of the time, this works beautifully. The abstractions hold. Your intent translates correctly.

Until it doesn’t.

The Early Feedback Loop

Here’s what made traditional coding powerful, even with all those abstraction layers: the feedback loop was tight.

You wrote code. You ran it. You saw what broke. You fixed it.

The computer didn’t guess at your intent. It executed your instructions literally, exposing misunderstandings immediately. Stack traces pointed to exactly where your mental model diverged from reality.

This brutal honesty was a feature, not a bug. Every compilation error, every runtime exception, every logical mistake surfaced quickly. The machine forced you to be precise because it had no capacity for interpretation.

You learned to think in terms the machine could execute. Not because you became less human, but because you developed a disciplined way of translating intention into instruction.

Then AI Complicated Things

Large Language Models changed the game fundamentally. Instead of requiring perfectly precise syntax, they work with natural language. Instead of executing literally, they predict probabilistically. Instead of exposing misunderstandings immediately, they generate plausible-sounding responses that might or might not align with your actual intent.

The feedback loop lengthened. The translation layers multiplied. The opportunities for misalignment exploded.

And here’s the uncomfortable truth: most organizations are using AI the way novice programmers use frameworks — invoking powerful abstractions without understanding what’s happening underneath.

You prompt an LLM. It generates code. You run that code. Maybe it works. Maybe it doesn’t. Maybe it seems to work but carries subtle misalignments that won’t surface until production.

The precision that compilation errors used to enforce? Gone. The immediate feedback that runtime exceptions provided? Delayed. The tight loop between intention and execution? Stretched across layers of probabilistic interpretation.

What Atomic Context Engineering Means

Atomic Context Engineering is our response to this problem. Not a silver bullet. Not a simple fix. A systematic approach to managing translation risk across abstraction layers.

The core idea: treat context as a first-class engineering concern.

Just as you version control code, test coverage, and deployment configurations, you need to engineer the context you provide to AI systems with the same rigor.

This means:

Decomposing problems into atomic units where “atomic” means the context is sufficiently complete that there’s minimal room for misinterpretation. Not because the AI can’t handle ambiguity, but because ambiguity is where strategic intent gets lost in translation.

Mapping abstraction layers explicitly so you understand each translation step between intention and execution. What goes into the LLM? What comes out? How does that output feed into the next layer? Where are the interpretation gaps?

Building verification loops that surface misalignments early, the way compilation errors used to. Not just testing outputs, but validating that the AI’s interpretation matches your actual intent.

Creating context primitives that can be composed reliably. Small, well-defined units of context that maintain their meaning across translations, the way well-designed functions maintain their contracts across abstraction layers.

This isn’t about constraining AI or reducing it to mechanical execution. It’s about amplifying human intent through systematic precision.

From Theory to Practice

Let’s make this concrete.

Say you’re building a system to analyze customer feedback. Traditional approach: write a prompt like “Analyze this feedback for sentiment and key themes.”

That works. Sort of. You get results. They’re probably useful. But what did the AI actually optimize for? What patterns did it prioritize? How did it handle edge cases? What assumptions did it make about what “key themes” means in your business context?

You won’t know until you’ve processed thousands of records and someone notices that the system categorizes urgent technical issues under “product feedback” instead of routing them to support.

The Atomic Context approach looks different.

You start by decomposing: What’s the purpose of this analysis? Who consumes the results? What decisions will they make? What context from your business domain does the AI need to interpret “urgent” correctly?

You map the abstraction layers: The feedback text is raw input. The AI’s analysis is an interpretation layer. The categorization is another abstraction. The routing decision is yet another. Each layer is a translation opportunity.

You build verification: Not just “did it categorize correctly?” but “did it interpret urgency the way our domain experts would?” You create test cases that expose misalignment early.

You develop context primitives: Reusable definitions of what “urgent” means in your business, what “technical issue” encompasses, how to weight customer tenure against issue severity.

Now you’re not just using AI. You’re engineering the context in which AI operates.

The Philosophical Foundation

This is where philosophy meets practice.

Most AI discussions focus on capabilities: what models can do, how to scale them, where to apply them. Atomic Context Engineering starts with ontology: what exists in your domain, how concepts relate, what distinctions matter.

We’ve learned this through building actual systems. When you try to use AI for strategic work, you quickly discover that vague context produces vague results, no matter how powerful the model.

The solution isn’t to constrain the AI’s creativity. It’s to provide context so precise that the AI’s probabilistic predictions align with your deterministic needs.

Think of it this way: a compiler doesn’t reduce your expressiveness. It enforces precision that makes your intentions executable. Atomic Context Engineering does the same for AI — it creates the disciplined structure that lets you translate strategic thinking into reliable automation.

Why This Matters for Your Business

The Atomic Context Problem isn’t an academic concern. It’s the reason most AI implementations deliver inconsistent value.

You’ve probably seen it: A promising proof of concept that falls apart at scale. An AI system that works brilliantly in demos but struggles with real-world edge cases. Automation that saves time on simple tasks but requires constant correction on anything nuanced.

These aren’t AI failures. They’re context engineering failures.

The companies getting real value from AI aren’t just using better models or writing better prompts. They’re treating context as an engineering discipline — systematically managing the translation from strategic intent through layers of abstraction to executable automation.

They’re building systems where human judgment amplifies AI capability, rather than fighting against probabilistic drift.

They’re developing the organizational muscle to think precisely about problems, not because precision limits creativity, but because disciplined thinking is what makes sophisticated capability useful.

The Path Forward

We’ve been developing Atomic Context Engineering through years of implementation work. Building agent teams. Designing Discovery processes. Creating frameworks that translate philosophical depth into practical execution.

The approach is codified in CEDAR — our Context Engineering for Development Automation Reliability framework. It’s not just theory. It’s what we use internally to build systems that actually work.

If you’re wrestling with AI implementation that delivers inconsistent results, or if you’re trying to move from promising demos to production reliability, the problem is probably context engineering.

Not your models. Not your prompts. Not your technical architecture.

Your ability to translate strategic intent into atomic context that AI systems can work with reliably.

We’ve written extensively about this in our white paper on CEDAR. It details the ontological foundations, the decomposition methodology, the verification patterns we’ve developed through actual implementation.

Because technology changes. But the challenge of translating human intention into machine execution? That’s as old as computing itself.

The only difference now is that AI has made the stakes higher and the feedback loops longer.

Atomic Context Engineering is how you tighten that loop again.

Ready to see the framework in detail?

Download CEDAR White Paper → Ontological approach to AI strategy — philosophical foundations meet implementation patterns

Want to explore how this applies to your organization?

Schedule Discovery Call → 60-minute conversation, Clarity Document within 48 hours. No obligation.